VPN Sidecar Containers

Intro

For security reasons, or to gain access to a remote network, you might want to give specific Kasm Workspaces access to a VPN. You could customize our existing core containers with VPN tools, but doing so requires many network-level permissions and quickly becomes a configuration nightmare. Our recommended configuration is to run an off-the-shelf or custom VPN Docker container alongside Kasm Workspaces while leveraging our existing desktop and application containers. Workspace traffic can then be forced through the VPN container using a custom Docker Exec Config. This document covers three different ways to achieve this.

Deployment time ~10 minutes.

Option 1: Roll your own OpenVPN setup

For this configuration we use an off-the-shelf OpenVPN container for the server, on the assumption that most users will already have an OpenVPN endpoint available. We then build a custom client container to act as the sidecar.

The OpenVPN server

Here we use kylemanna/openvpn to quickly spin up an endpoint on a remote server. Use the following commands to deploy it and dump a usable client config file:

sudo docker volume create --name Kasm_vpn

sudo docker run -v Kasm_vpn:/etc/openvpn --rm kylemanna/openvpn ovpn_genconfig -u udp://IP_OR_HOSTNAME

sudo docker run -v Kasm_vpn:/etc/openvpn --rm -it kylemanna/openvpn ovpn_initpki

sudo docker run -v Kasm_vpn:/etc/openvpn -d -p 1194:1194/udp --cap-add=NET_ADMIN kylemanna/openvpn

sudo docker run -v Kasm_vpn:/etc/openvpn --rm -it kylemanna/openvpn easyrsa build-client-full Kasm nopass

sudo docker run -v Kasm_vpn:/etc/openvpn --rm kylemanna/openvpn ovpn_getclient Kasm > Kasm.ovpn

Replace IP_OR_HOSTNAME with the actual IP address or hostname of your server. After answering the prompts for password-protecting the certificates, you will be left with the Kasm.ovpn configuration file needed to connect your sidecar client.
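Before copying the profile to the Kasm host, it can be worth confirming that it points at the endpoint you intended. The following is a minimal sketch, assuming the generated profile contains a standard OpenVPN "remote" directive; the helper name ovpn_remotes is made up for this example and is not part of OpenVPN or Kasm.

```shell
#!/bin/sh
# Hypothetical helper (not part of OpenVPN or Kasm): print the host, port and
# protocol of every "remote" directive found in a client profile.
ovpn_remotes() {
    # $1: path to the .ovpn file
    awk '$1 == "remote" { print $2, $3, $4 }' "$1"
}

# Example usage:
#   ovpn_remotes Kasm.ovpn
```

The printed host should match the IP_OR_HOSTNAME value passed to ovpn_genconfig.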

The OpenVPN sidecar container

On your Kasm Workspaces host you will need to build a custom docker container to act as our VPN sidecar container.

mkdir openvpn-client

cd openvpn-client

mkdir root

Here is our Dockerfile:

FROM alpine:3.15

RUN \
  apk add --no-cache \
    bind-tools \
    openvpn

# add local files
COPY /root /

VOLUME [ "/vpn/config" ]
ENTRYPOINT [ "/entrypoint.sh" ]

And our custom root/entrypoint.sh:

#! /bin/sh

# create tun device
if [ ! -c /dev/net/tun ]; then
  mkdir -p /dev/net
  mknod /dev/net/tun c 10 200
fi

# Enable devices MASQUERADE mode
iptables -t nat -A POSTROUTING -o tun+ -j MASQUERADE

# start vpn client
openvpn --config /vpn/config/${VPN_CONFIG}

Make sure our entrypoint is executable:

chmod +x root/entrypoint.sh

Your folder should look like this:

openvpn-client/
├─ root/
│  ├─ entrypoint.sh
├─ Dockerfile

Now build the container:

sudo docker build -t openvpn-client .

On the Docker host running Kasm Workspaces (or the Agent Server if using a Multi-Server Deployment) we need to create a custom Docker network:

sudo docker network create \
    --driver=bridge \
    --opt icc=true \
    --subnet=172.20.0.0/16 \
    vpn-1
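The sidecar is later given the fixed address 172.20.0.2, which must fall inside the subnet chosen here. If you pick a different subnet or address, a quick check like the following can catch a mismatch before Docker rejects it. This is only a sketch in plain POSIX shell arithmetic, assuming IPv4 and a shell with 64-bit arithmetic; the function names ip_to_int and ip_in_subnet are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: return 0 if address $1 lies inside CIDR block $2.
ip_in_subnet() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")     # network part before the slash
    bits=${2#*/}                   # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Example usage:
#   ip_in_subnet 172.20.0.2 172.20.0.0/16 && echo "address fits the subnet"
```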

Finally, navigate to the folder containing your Kasm.ovpn config file and run the container:

sudo docker run -d \
    --cap-add NET_ADMIN \
    --name open-vpn \
    --net vpn-1 \
    --ip 172.20.0.2 \
    -e VPN_CONFIG=Kasm.ovpn \
    -v $(pwd):/vpn/config \
    --restart unless-stopped \
    openvpn-client
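Because the entrypoint opens /vpn/config/${VPN_CONFIG}, the container will exit immediately if the command is run from a folder that does not contain the profile. A small preflight check along these lines can make that failure obvious. It is a sketch only; vpn_config_present is a hypothetical name, not a Docker or Kasm command.

```shell
#!/bin/sh
# Hypothetical preflight check: succeed only if the profile that the sidecar
# will open (/vpn/config/$VPN_CONFIG inside the container) exists and is
# readable in the host directory that is about to be bind-mounted.
vpn_config_present() {
    # $1: host directory mounted at /vpn/config, $2: value of VPN_CONFIG
    [ -f "$1/$2" ] && [ -r "$1/$2" ]
}

# Example usage:
#   vpn_config_present "$(pwd)" Kasm.ovpn || echo "Kasm.ovpn not found here" >&2
```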

With the sidecar container running, please skip ahead to Customizing Workspaces.

Option 2: Using a popular VPN vendor container

We will use an off-the-shelf VPN container specifically geared toward NordVPN, but the steps should be similar for other providers or configurations.

Setting up a VPN container (NordVPN)

On the Docker host running Kasm Workspaces (or the Agent Server if using a Multi-Server Deployment) we first need to create a custom Docker network:

docker network create \
    --driver=bridge \
    --opt icc=true \
    --subnet=172.20.0.0/16 \
    vpn-1

Now we can spin up a NordVPN Docker container:

docker run -d \
    --cap-add NET_ADMIN \
    --cap-add NET_RAW \
    --name nord-vpn \
    --net vpn-1 \
    --ip 172.20.0.2 \
    -e USER=YOURUSERNAME \
    -e PASS='YOURPASSWORD' \
    -e TECHNOLOGY=NordLynx \
    --restart unless-stopped \
    ghcr.io/bubuntux/nordvpn
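Passing credentials with -e leaves them in your shell history. As one possible alternative, docker run also accepts an --env-file option that reads VARIABLE=value lines from a file. The sketch below writes such a file with owner-only permissions; write_vpn_env and the file name nordvpn.env are hypothetical, and values are written literally without quotes because Docker does not strip quotes from env files.

```shell
#!/bin/sh
# Hypothetical helper: write the USER and PASS variables used by the NordVPN
# container to an env file readable only by the current user.
write_vpn_env() {
    # $1: output path, $2: username, $3: password
    (
        umask 077   # new file gets mode 0600; subshell keeps the umask local
        printf 'USER=%s\nPASS=%s\n' "$2" "$3" > "$1"
    )
}

# Example usage:
#   write_vpn_env nordvpn.env YOURUSERNAME 'YOURPASSWORD'
#   then replace the two -e USER/PASS options with: --env-file nordvpn.env
```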

Now that the VPN container is set up, let’s move on to Customizing Workspaces.

Option 3: Tailscale

Tailscale is a zero-configuration VPN solution that allows users to quickly connect to a network of remote computers by their Tailscale IP addresses. Here we will configure a sidecar container to route traffic to the machines on your Tailscale network. Note that we are not configuring an exit node; we are simply allowing VPN-enabled containers to route traffic out to your Tailscale network.

Getting your Tailscale auth key

Most users who are connecting their Workspaces containers to existing infrastructure can skip this step. If you are new to Tailscale, you will first need to sign up for an account.

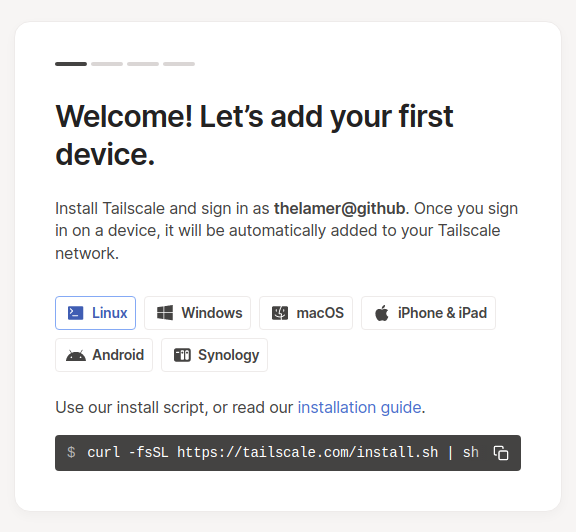

After signing up you will be presented with a login screen:

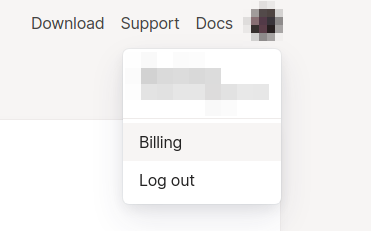

Ignore the setup wizard and click the avatar on the top right of the screen and select “Billing”:

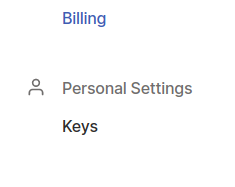

From here click on “Keys” under “Personal Settings”:

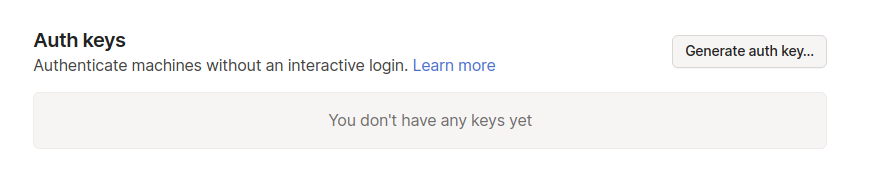

Click on “Generate auth key…”:

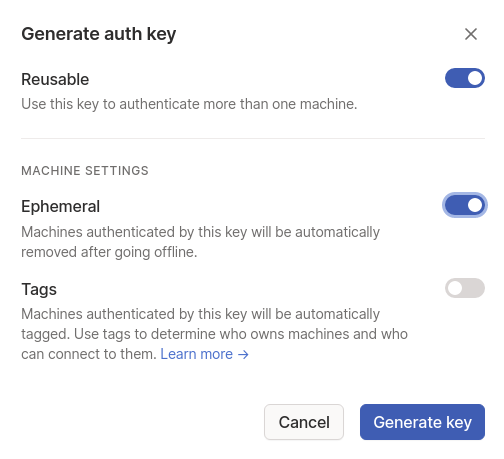

We recommend enabling “Reusable” and “Ephemeral”, but ultimately you should review the settings and decide for yourself:

Copy the key you have been given and move on to the next step.

Setting up a Tailscale Docker container

On your Kasm Workspaces host you will need to build a custom docker container to act as our VPN sidecar container.

mkdir tailscale

cd tailscale

mkdir root

Here is our Dockerfile:

FROM alpine:3.15

RUN \
  apk add --no-cache \
    bind-tools \
    tailscale

# add local files
COPY /root /

ENTRYPOINT [ "/entrypoint.sh" ]

And our custom root/entrypoint.sh:

#! /bin/sh

# create tun device
if [ ! -c /dev/net/tun ]; then
  mkdir -p /dev/net
  mknod /dev/net/tun c 10 200
fi

# Enable devices MASQUERADE mode
iptables -t nat -A POSTROUTING -o eth+ -j MASQUERADE
iptables -t nat -A POSTROUTING -o tailscale+ -j MASQUERADE

# start vpn client
tailscaled

Make sure our entrypoint is executable:

chmod +x root/entrypoint.sh

Your folder should look like this:

tailscale/
├─ root/
│  ├─ entrypoint.sh
├─ Dockerfile

Now build the container:

sudo docker build -t tailscaled .

On the Docker host running Kasm Workspaces (or the Agent Server if using a Multi-Server Deployment) we need to create a custom Docker network:

sudo docker network create \
    --driver=bridge \
    --opt icc=true \
    --subnet=172.20.0.0/16 \
    vpn-1

Now we can spin up a Tailscale Docker container:

sudo docker run -d \
    --cap-add NET_ADMIN \
    --name tailscaled \
    --net vpn-1 \
    --ip 172.20.0.2 \
    --restart unless-stopped \
    tailscaled

Now log in using your auth key:

sudo docker exec tailscaled tailscale up --authkey=<YOUR KEY FROM PREVIOUS STEP>

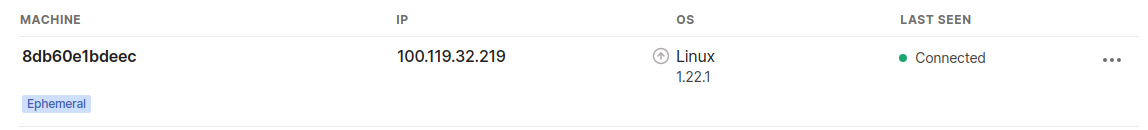

Now open the Machines page of the Tailscale admin console and ensure the machine is listed:

With the sidecar container running, please move on to Customizing Workspaces.

Customizing Workspaces

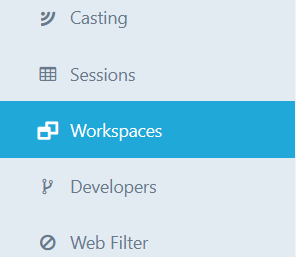

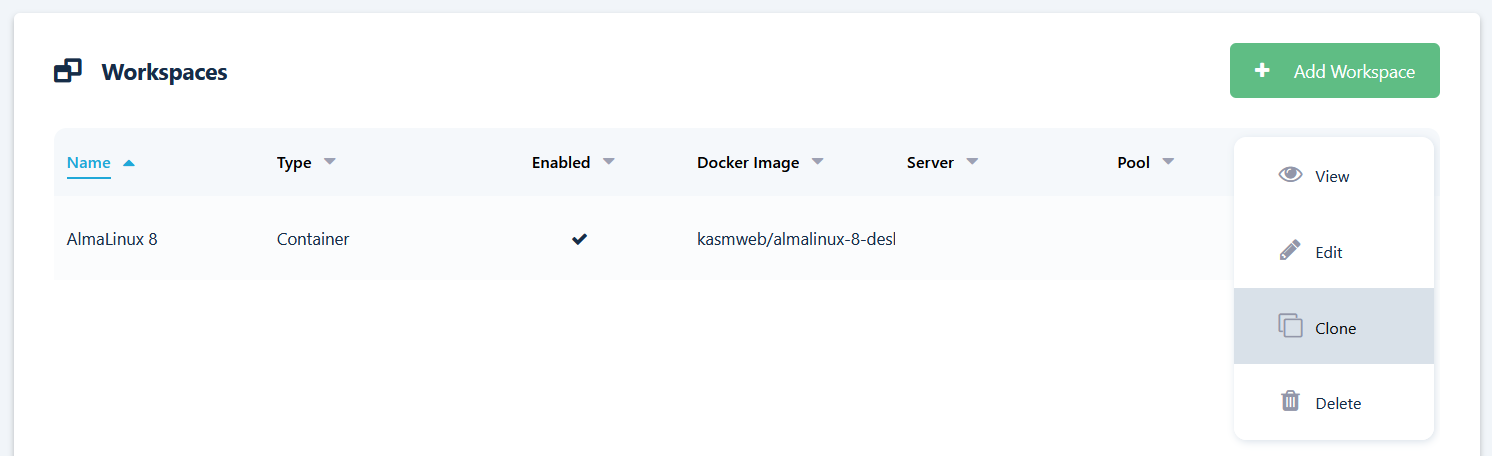

Log in to the Workspaces web interface and click on “Workspaces” from the Admin tab:

Now select the settings button next to the Workspace you want to modify to use this network and select “Clone”:

In this example we will be modifying a CentOS 7 desktop Workspace.

First, change the Friendly Name to indicate that this is a VPN-enabled container, for example CentOS 7 - VPN.

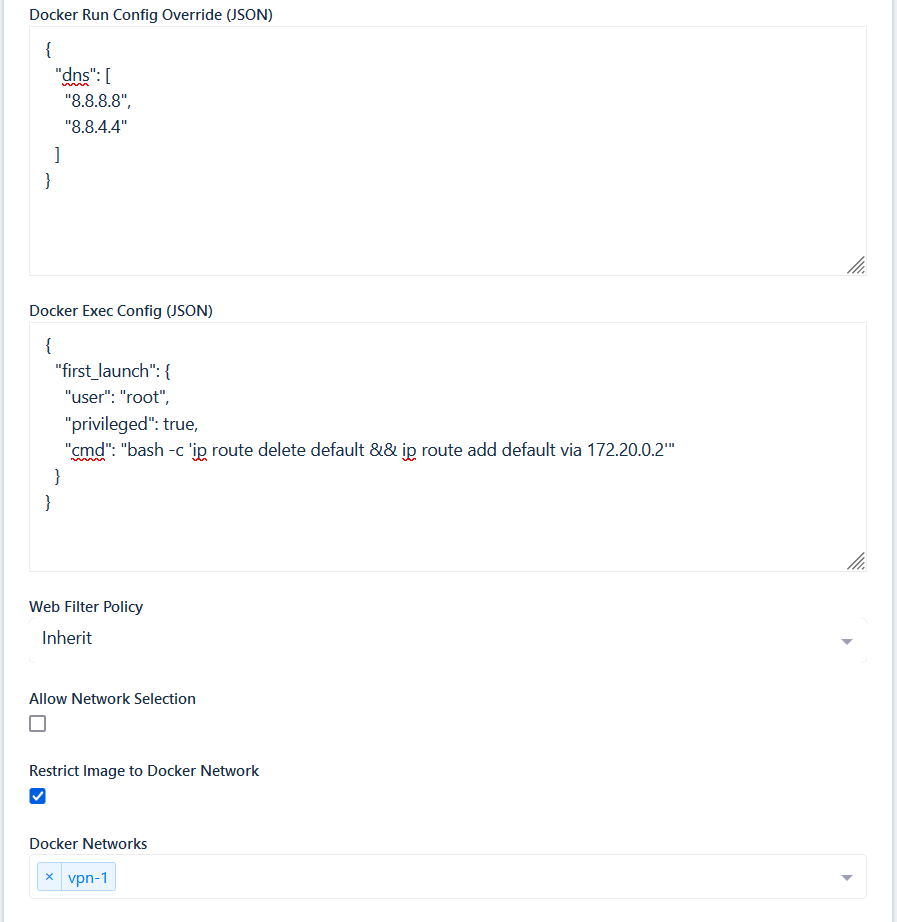

Next change the Docker Exec Config (JSON) to:

{"first_launch":{"user":"root","privileged":true,"cmd":"bash -c 'ip route delete default && ip route add default via 172.20.0.2'"}}
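The only part of this JSON that changes between deployments is the gateway address, which must be the IP given to the sidecar container (172.20.0.2 in this guide). If you run several VPN networks, a small generator can help avoid quoting mistakes when pasting the value into the admin UI. This is an illustrative sketch; exec_config_for_gateway is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the Docker Exec Config JSON for a given sidecar
# (gateway) IP address. The layout mirrors the JSON shown above.
exec_config_for_gateway() {
    # $1: IP address of the VPN sidecar container
    cat <<EOF
{"first_launch":{"user":"root","privileged":true,"cmd":"bash -c 'ip route delete default && ip route add default via $1'"}}
EOF
}

# Example usage:
#   exec_config_for_gateway 172.20.0.2
```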

Then select “Restrict Image to Docker Network” and choose the network we created in the previous step (vpn-1).

Once finished your settings should look something like this:

Click Submit and return to the Workspaces tab, where you should be presented with your new Workspace:

The Workspace is now ready to deploy. The same process can be followed for any of our containers to pipe their network traffic through the VPN container.

To test your configuration, use a test that is applicable to your container and use case:

Testing

Testing your VPN in the container will vary depending on the provider or use case, but here are some tips.

In these examples we will be using the CentOS 7 - VPN Workspace we configured earlier.

Finding your public IP (OpenVPN and NordVPN)

Click on Applications -> Terminal Emulator and enter:

curl icanhazip.com

Make sure the IP returned is not your current public IP.
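If the address returned is still your own public IP, a useful first check is whether the Docker Exec Config actually replaced the default route inside the Workspace. The sketch below assumes the ip command from iproute2 is available in the Workspace (the exec config already relies on it) and that it prints routes in the usual "default via ADDRESS dev INTERFACE" form; default_gateway is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read routing table lines on stdin and print the
# gateway address of the first default route.
default_gateway() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Example usage inside the Workspace terminal:
#   ip route show default | default_gateway
```

The printed address should be the sidecar IP used in the Docker Exec Config, 172.20.0.2 in this guide.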

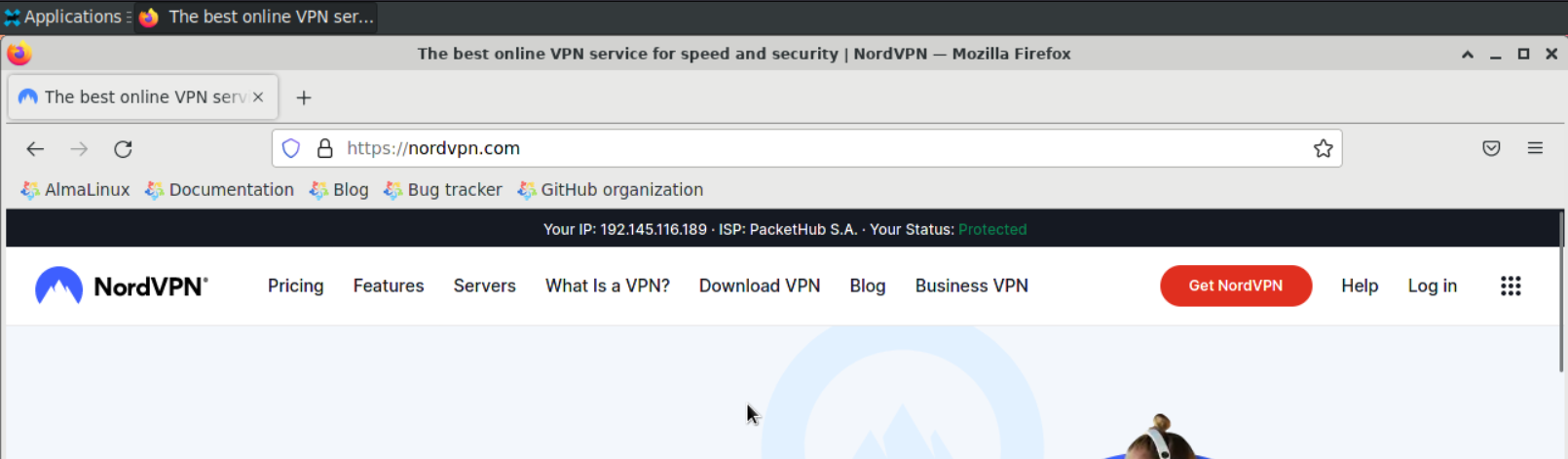

NordVPN testing

Most VPN providers give some kind of indication on their home page that you are “protected”. Here we are using NordVPN, so let’s open Firefox from the desktop and navigate to https://nordvpn.com. You should see a Protected status:

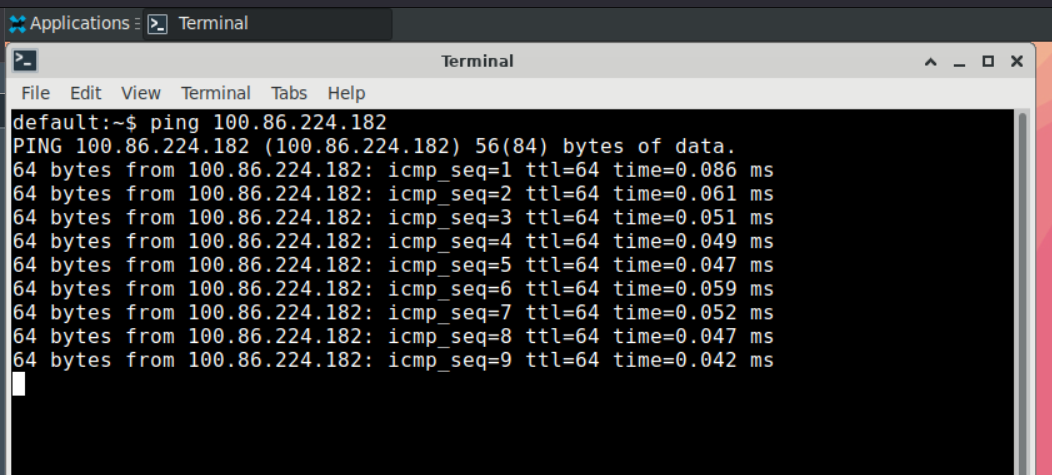

Tailscale testing

Here we will run a simple ping from the Workspace to another device on our Tailscale network. To test this you will need at least one other device connected to your network. The Tailscale dashboard shows the IPs of your connected devices. In this example we will use 100.103.71.235.

We assume you modified a desktop Workspace; in particular, we are using the CentOS 7 example from above. Once launched into the session, open a terminal from Applications -> Terminal Emulator:

Now run a ping command:

ping 100.103.71.235
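If the ping gets no reply, it is worth double-checking that the target really is a Tailscale address and not a LAN address copied by mistake. Tailscale assigns IPv4 addresses from the 100.64.0.0/10 range, so the first octet is 100 and the second is between 64 and 127. The following sketch checks only those two octets; is_tailscale_ip is a hypothetical helper, not a Tailscale command.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if $1 looks like an address in 100.64.0.0/10.
# Only the first two octets are inspected; the rest is not validated.
is_tailscale_ip() {
    case $1 in
        100.*.*.*) ;;
        *) return 1 ;;
    esac
    second=${1#100.}        # drop the leading "100."
    second=${second%%.*}    # keep only the second octet
    case $second in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$second" -ge 64 ] && [ "$second" -le 127 ]
}

# Example usage:
#   is_tailscale_ip 100.103.71.235 && ping -c 3 100.103.71.235
```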

You should see replies:

You are all set. Any container with this configuration will have access to other machines on your Tailscale network.